Q: Do we need more research? Why do we need clinical trials?

A: Medical research is the search for cures for illness and disease, and it has been one of the most important human activities throughout history. There are two motives for research. Aside from the pure pursuit of knowledge for its own sake, research is linked to problem solving, which means solving what other people experience as problems.

Clinical trials are essential to the development of new medical treatments and diagnostic tests. Without clinical trials, we cannot properly determine whether new treatments developed in the laboratory or in animal models are effective and safe, or whether a diagnostic test works properly. This is because computer simulation and animal testing can only tell us so much about how a new treatment might work, and they are no substitute for testing in a living human body.

Current evidence suggests that there is an association between the engagement of clinicians and healthcare organisations in research and improvements in healthcare performance. The improvement in healthcare performance, i.e., in the process of care and the health outcomes of patients, occurred even when these had not been the primary aims of the research. The mechanisms through which research engagement might improve healthcare performance overlap and rarely act in isolation, and their effectiveness often depends on the context in which they operate (please refer to the table below).

| | Broad impact | Specific impact |
|---|---|---|
| Clinicians | ▸ Change in attitudes and behaviour that research engagement can promote<br>▸ Involvement in the processes of research | ▸ Greater awareness and understanding of the specific research findings<br>▸ Increased relevance of the research<br>▸ Increased knowledge and understanding of the findings gained through participation in the research<br>▸ Clinician participation in research networks is particularly effective when the science is changing rapidly and when keeping up to date is critical |
| Organisations | ▸ Use of the infrastructure created to support trials more widely, or for a longer period, to improve patient care<br>▸ Centres within networks build up a record of implementing research findings<br>▸ Network membership increases the likelihood of physicians recommending guideline-concordant treatment<br>▸ Organisations affiliated to a network adopt an integrated, programmatic approach to improving the quality of care, including the professional education, training and national meetings provided | ▸ Applying the processes and protocols developed in a specific study (not counting any impact from regimens in the intervention arm) to all patients with a specific illness, irrespective of their involvement in the trial<br>▸ The importance of effective collaboration and the need for a supportive context |

Answers extracted from:

- From the NIH Director: The Value of Medical Research https://medlineplus.gov/magazine/issues/summer08/articles/summer08pg2-3.html

- A question universities need to answer: why do we research? http://theconversation.com/a-question-universities-need-to-answer-why-do-we-research-6230

- Why do we need clinical trials? https://www.australianclinicaltrials.gov.au/why-do-we-need-clinical-trials

- Boaz A, Hanney S, Jones T, Soper B. Does the engagement of clinicians and organisations in research improve healthcare performance: a three-stage review. BMJ Open. 2015 Dec 9;5(12):e009415. doi: 10.1136/bmjopen-2015-009415.

Q: Is 85% of health research really “wasted”?

A: Let’s break up the 85% figure into its components. The easiest fraction to understand is the fraction wasted by failure to publish completed research. We know from follow-up of registered clinical trials that about 50% are never published in full, a figure which varies little across countries, size of study, funding source, or phase of trial. If the results of research are never made publicly accessible, whether to other researchers or to end-users, then they cannot contribute to knowledge. The time, effort, and funds involved in planning and conducting further research without access to this knowledge are incalculable.

Publication is one necessary, but insufficient, step in avoiding research waste. Published reports of research must also be sufficiently clear, complete, and accurate for others to interpret, use, or replicate the research correctly. But again, at least 50% of published reports do not meet these requirements. Measured endpoints are often not reported, methods and analysis are poorly explained, and interventions are insufficiently described for others (researchers, health professionals, and patients) to use. All these problems are avoidable, and hence represent a further “waste.”

Finally, new research studies should be designed to take systematic account of lessons and results from previous, related research, but at least 50% are not. New studies are frequently developed without a systematic examination of previous research on the same questions, and they often contain readily avoidable design flaws. And even if well designed, the execution of the research process may invalidate it, for example, through poor implementation of randomization or blinding procedures.

Given these essential elements (accessible publication, complete reporting, good design), we can estimate the overall percentage of waste. Let us first consider what fraction of 100 research projects DO satisfy all these criteria. Of 100 projects, 50 would be published. Of these 50 published studies, 25 would be sufficiently well reported to be usable and replicable. And of those 25, about half (12.5) would have no serious, avoidable design flaws. Hence the percentage of research that does NOT satisfy these stages is the remainder, or 87.5 out of 100. In the 2009 paper (see below), this was rounded down to 85%.
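The arithmetic above can be checked with a few lines of Python. This is only a minimal sketch: the stage labels and the function name `usable_projects` are illustrative, and the 50% pass rate at each stage is the rounded figure quoted in the text, not a precise estimate.

```python
# Stage-by-stage survival of 100 hypothetical research projects, using the
# "about 50%" pass rate quoted in the text for each stage (rounded figures).
STAGES = [
    ("published in full", 0.5),
    ("reported clearly and completely", 0.5),
    ("free of serious, avoidable design flaws", 0.5),
]


def usable_projects(started, stages=STAGES):
    """Return how many projects remain after passing every stage in turn."""
    remaining = started
    for _label, pass_rate in stages:
        remaining *= pass_rate
    return remaining


started = 100
usable = usable_projects(started)
wasted_percent = 100 * (started - usable) / started
print(f"usable: {usable} of {started}; wasted: {wasted_percent}%")
# prints "usable: 12.5 of 100; wasted: 87.5%"
```

Because the losses compound, the result is sensitive to each stage: changing any one pass rate in `STAGES` changes the overall figure multiplicatively.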

Although the data on which our estimates were based came mainly from research on clinical research, particularly controlled trials, the problems appear to be at least as great in preclinical research. Additionally, our 2009 estimate did not account for waste in deciding what research to do and inefficiencies in regulating and conducting research. These were covered in the 2014 Lancet series on waste (see below), but it is harder to arrive at a justifiable estimate of their impact.

Answers extracted from:

- Paul Glasziou and Iain Chalmers: Is 85% of health research really “wasted”? https://blogs.bmj.com/bmj/2016/01/14/paul-glasziou-and-iain-chalmers-is-85-of-health-research-really-wasted/

- Chalmers I, Glasziou P. Avoidable waste in the production and reporting of research evidence. Lancet. 2009 Jul 4;374(9683):86-9.

- Research: increasing value, reducing waste https://www.thelancet.com/series/research

Q: Does poor medical research amount to a scandal?

A: What should we think about a doctor who uses the wrong treatment, either wilfully or through ignorance, or who uses the right treatment wrongly (such as by giving the wrong dose of a drug)? Most people would agree that such behaviour was unprofessional, arguably unethical, and certainly unacceptable. What, then, should we think about researchers who use the wrong techniques (either wilfully or in ignorance), use the right techniques wrongly, misinterpret their results, report their results selectively, cite the literature selectively, and draw unjustified conclusions? We should be appalled. Yet numerous studies of the medical literature, in both general and specialist journals, have shown that all of the above phenomena are common. This is surely a scandal. When people outside medicine are told that many papers published in medical journals are misleading because of methodological weaknesses, they are rightly shocked. Huge sums of money are spent annually on research that is seriously flawed through the use of inappropriate designs, unrepresentative samples, small samples, incorrect methods of analysis, and faulty interpretation. Errors are so varied that a whole book on the topic, valuable as it is, is not comprehensive; in any case, many of those who make the errors are unlikely to read it.

Why are errors so common? Put simply, much poor research arises because researchers feel compelled for career reasons to carry out research that they are ill equipped to perform, and nobody stops them. Regardless of whether a doctor intends to pursue a career in research, he or she is usually expected to carry out some research with the aim of publishing several papers. The length of a list of publications is a dubious indicator of ability to do good research; its relevance to the ability to be a good doctor is even more obscure. A common argument in favour of every doctor doing some research is that it provides useful experience and may help doctors to interpret the published research of others. Carrying out a sensible study, even on a small scale, is indeed useful, but carrying out an ill designed study in ignorance of scientific principles and getting it published surely teaches several undesirable lessons.

In many countries, including Malaysia, a research ethics committee has to approve all research involving patients. Although one of the recommended scientific criteria in the evaluation of research proposals is study validity, few ethics committees include an epidemiologist or a statistician. Indeed, many ethics committees explicitly take a view of ethics that excludes scientific issues. Consequently, poor or useless studies pass such review even though they can reasonably be considered to be unethical. The effects of the pressure to publish may be seen most clearly in the increase in scientific fraud, much of which is relatively minor and is likely to escape detection. The temptation to behave dishonestly is surely far greater now, when all too often the main reason for a piece of research seems to be to lengthen a researcher's curriculum vitae. There may be a greater danger to the public welfare from statistical dishonesty than from almost any other form of dishonesty.

The poor quality of much medical research is widely acknowledged, yet disturbingly the leaders of the medical profession seem only minimally concerned about the problem and make no apparent efforts to find a solution. Manufacturing industry has come to recognise, albeit gradually, that quality control needs to be built in from the start rather than the failures being discarded, and the same principles should inform medical research. The issue here is not one of statistics as such. Rather it is a more general failure to appreciate the basic principles underlying scientific research, coupled with the “publish or perish” climate. As the system encourages poor research it is the system that should be changed. We need less research, better research, and research done for the right reasons. Abandoning the use of the number of publications as a measure of ability would be a start.

Answers extracted from:

- Altman DG. The scandal of poor medical research. BMJ. 1994 Jan 29;308(6924):283-4.

- Smith R. Their lordships on medical research. BMJ. 1995 Jun 17;310(6994):1552.

Q: Why are most published research findings false? How can we improve the situation?

A: There is increasing concern that most current published research findings are false. The probability that a research claim is true may depend on study power and bias, the number of other studies on the same question, and, importantly, the ratio of true to no relationships among the relationships probed in each scientific field. In this framework, a research finding is less likely to be true when the studies conducted in a field are smaller; when effect sizes are smaller; when there is a greater number and lesser pre-selection of tested relationships; where there is greater flexibility in designs, definitions, outcomes, and analytical modes; when there is greater financial and other interest and prejudice; and when more teams are involved in a scientific field in chase of statistical significance.

Simulations show that for most study designs and settings, it is more likely for a research claim to be false than true. Moreover, for many current scientific fields, claimed research findings may often be simply accurate measures of the prevailing bias. A major problem is that it is impossible to know with 100% certainty what the truth is in any research question. In this regard, the pure “gold” standard is unattainable. However, there are several approaches to improve the post-study probability:

- Better powered evidence, e.g., large studies or low-bias meta-analyses, may help, as it comes closer to the unknown “gold” standard. Large-scale evidence should be targeted for research questions where the pre-study probability is already considerably high, so that a significant research finding will lead to a post-test probability that would be considered quite definitive. Large-scale evidence is also particularly indicated when it can test major concepts rather than narrow, specific questions. A negative finding can then refute not only a specific proposed claim, but a whole field or considerable portion thereof. Selecting the performance of large-scale studies based on narrow-minded criteria, such as the marketing promotion of a specific drug, is largely wasted research. One should be cautious that extremely large studies may be more likely to find a formally statistically significant difference for a trivial effect that is not really meaningfully different from the null.

- Diminishing bias through enhanced research standards and curtailing of prejudices may also help. In some research designs, efforts may also be more successful with upfront registration of studies, e.g., randomized trials. Registration would pose a challenge for hypothesis generating research. Regardless, even if we do not see a great deal of progress with registration of studies in other fields, the principles of developing and adhering to a protocol could be more widely borrowed from randomized controlled trials.

- Finally, instead of chasing statistical significance, we should improve our understanding of the range of R values—the pre-study odds—where research efforts operate. Before running an experiment, investigators should consider what they believe the chances are that they are testing a true rather than a non-true relationship. Speculated high R values may sometimes then be ascertained. Whenever ethically acceptable, large studies with minimal bias should be performed on research findings that are considered relatively established, to see how often they are indeed confirmed.
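The post-study probability discussed above can be made concrete with a short calculation. The sketch below uses the positive predictive value (PPV) formula as given in the cited Ioannidis (2005) paper, PPV = ([1 − β]R + uβR) / (R + α − βR + u − uα + uβR), where R is the pre-study odds, 1 − β the power, α the type I error rate, and u the bias term. The function name `ppv`, its parameter names, and the two scenarios are illustrative choices, and the formula should be checked against the original paper before being relied on.

```python
def ppv(R, power, alpha=0.05, bias=0.0):
    """Post-study probability that a claimed research finding is true.

    R     : pre-study odds that a probed relationship is true
    power : 1 - beta, the chance of detecting a true relationship
    alpha : type I error rate
    bias  : u, the share of analyses reported as findings only because of bias
    """
    beta = 1.0 - power
    true_claims = power * R + bias * beta * R
    all_claims = R + alpha - beta * R + bias - bias * alpha + bias * beta * R
    return true_claims / all_claims


# Two illustrative scenarios (inputs chosen for demonstration only).
well_powered = ppv(R=1.0, power=0.80, bias=0.10)
underpowered = ppv(R=0.2, power=0.20, bias=0.20)
print(f"well-powered trial, R = 1:1 -> PPV = {well_powered:.2f}")
print(f"underpowered trial, R = 1:5 -> PPV = {underpowered:.2f}")
```

With the bias term set to zero the expression reduces to (1 − β)R / (R − βR + α), which shows why low pre-study odds and low power both pull the post-study probability down.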

Answers extracted from:

- Ioannidis JP. Why most published research findings are false. PLoS Med. 2005 Aug;2(8):e124. Epub 2005 Aug 30.

- Goodman S, Greenland S. Why most published research findings are false: problems in the analysis. PLoS Med. 2007 Apr;4(4):e168.

- Ioannidis JP. Why most published research findings are false: author's reply to Goodman and Greenland. PLoS Med. 2007 Jun;4(6):e215.

Q: How can we make more published research true?

A: The achievements of scientific research are amazing. Science has grown from the occupation of a few dilettanti into a vibrant global industry with more than 15,000,000 people authoring more than 25,000,000 scientific papers in 1996–2011 alone. However, true and readily applicable major discoveries are far fewer. Many new proposed associations and/or effects are false or grossly exaggerated, and translation of knowledge into useful applications is often slow and potentially inefficient. Given the abundance of data, research on research (i.e., meta-research) can derive empirical estimates of the prevalence of risk factors for high false-positive rates (underpowered studies; small effect sizes; low pre-study odds; flexibility in designs, definitions, outcomes, analyses; biases and conflicts of interest; bandwagon patterns; and lack of collaboration). Currently, an estimated 85% of research resources are wasted. We need effective interventions to improve the credibility and efficiency of scientific investigation. Some risk factors for false results are immutable, like small effect sizes, but others are modifiable. We must diminish biases, conflicts of interest, and fragmentation of efforts in favor of unbiased, transparent, collaborative research with greater standardization. However, we should also consider the possibility that interventions aimed at improving scientific efficiency may cause collateral damage or themselves wastefully consume resources. To give an extreme example, one could easily eliminate all false positives simply by discarding all studies with even minimal bias, by making the research questions so bland that nobody cares about (or has a conflict with) the results, and by waiting for all scientists in each field to join forces on a single standardized protocol and analysis plan: the error rate would decrease to zero simply because no research would ever be done. 
Thus, whatever solutions are proposed should be pragmatic, applicable, and ideally, amenable to reliable testing of their performance.

To make more published research true, practices that have improved credibility and efficiency in specific fields may be transplanted to other fields that would benefit from them. Possibilities include:

- Adoption of large-scale collaborative research;

- Replication culture; registration; sharing; reproducibility practices;

- Better statistical methods;

- Standardization of definitions and analyses;

- More appropriate (usually more stringent) statistical thresholds;

- Improvement in study design standards, peer review, reporting and dissemination of research;

- Training of the scientific workforce on research quality and integrity in the whole research process;

- Modifications in the reward system for science, affecting the exchange rates for currencies (e.g., publications and grants) and purchased academic goods (e.g., promotion and other academic or administrative power) and introducing currencies that are better aligned with translatable and reproducible research.

The extent to which the current efficiency of research practices can be improved is unknown. Given the existing huge inefficiencies, however, substantial improvements are almost certainly feasible. The fine-tuning of existing policies and more disruptive and radical interventions should be considered with proper scrutiny and be evaluated experimentally. The achievements of science are amazing, yet the majority of research effort is currently wasted. Interventions to make science less wasteful and more effective could be hugely beneficial to our health, our comfort, and our grasp of truth and could help scientific research more successfully pursue its noble goals.

Answers extracted from:

- Ioannidis JP. How to make more published research true. PLoS Med. 2014 Oct;11(10):e1001747.

Q: What factors might increase the quality of evidence in observational studies? Guideline developers’ perspectives.

A: Although well done observational studies generally yield low quality evidence, in unusual circumstances they may produce moderate or even high-quality evidence.

Firstly, when methodologically strong observational studies yield large or very large and consistent estimates of the magnitude of a treatment effect, we may be confident about the results. In those situations, although the observational studies are likely to have provided an overestimate of the true effect, the weak study design is unlikely to explain all of the apparent benefit. The larger the magnitude of effect, the stronger becomes the evidence. For example, a meta-analysis of observational studies showed that bicycle helmets reduce the risk of head injuries in cyclists involved in a crash by a large margin (odds ratio 0.31, 95% confidence interval 0.26 to 0.37). This large effect suggests a rating of moderate quality evidence. A meta-analysis of observational studies evaluating the impact of warfarin prophylaxis in cardiac valve replacement found that the relative risk for thromboembolism with warfarin was 0.17 (95% confidence interval 0.13 to 0.24). This very large effect suggests a rating of high quality evidence.
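The two worked examples above can be expressed as a simple classification rule. The sketch below is an assumption, not part of the cited paper: it uses the rule of thumb from later GRADE guidance (a ratio above 2 or below 0.5 counts as a large effect, above 5 or below 0.2 as very large), and the function name `effect_magnitude` is hypothetical. A real rating also depends on study quality and consistency, which this check ignores.

```python
def effect_magnitude(ratio):
    """Classify a risk or odds ratio by effect size, in either direction.

    Thresholds are an assumed rule of thumb: >2 (or <0.5) is large,
    >5 (or <0.2) is very large.
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    # Fold protective effects (<1) onto the same scale as harmful ones (>1).
    folded = max(ratio, 1.0 / ratio)
    if folded > 5:
        return "very large"
    if folded > 2:
        return "large"
    return "not large"


print(effect_magnitude(0.31))  # bicycle helmets, odds ratio 0.31 -> "large"
print(effect_magnitude(0.17))  # warfarin, relative risk 0.17 -> "very large"
```

Under these assumed thresholds the helmet example lands in the "large" band (consistent with a moderate rating) and the warfarin example in the "very large" band (consistent with a high rating).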

Secondly, on occasion all plausible biases from observational studies may be working to underestimate the true treatment effect. For instance, if only sicker patients receive an experimental intervention or exposure, yet the patients receiving it still fare better, it is likely that the actual intervention or exposure effect is larger than the data suggest. For example, a rigorous systematic review of observational studies that included a total of 38 million patients found higher death rates in private for-profit hospitals compared with private not-for-profit hospitals. Biases related to different disease severity in patients in the two hospital types, and the spillover effect from well-insured patients, would both lead to estimates in favour of for-profit hospitals. Therefore the evidence from these observational studies might be considered as of moderate quality rather than low quality; that is, the effect is likely to be at least as large as was observed and may be larger.

Thirdly, the presence of a dose-response gradient may increase confidence in the findings of observational studies and thereby increase the assigned quality of evidence. For example, the observation that, in patients receiving anticoagulation with warfarin, there is a dose-response gradient between higher levels of the international normalised ratio and an increased risk of bleeding increases confidence that supratherapeutic anticoagulation levels increase the risk of bleeding.

Answers extracted from:

- Guyatt GH, Oxman AD, Kunz R, Vist GE, Falck-Ytter Y, Schünemann HJ; GRADE Working Group. What is "quality of evidence" and why is it important to clinicians? BMJ. 2008 May 3;336(7651):995-8. doi: 10.1136/bmj.39490.551019.BE.

Q: What factors might decrease the quality of evidence in experimental studies? Guideline developers’ perspectives.

A: The GRADE (Grading of Recommendations Assessment, Development and Evaluation) approach involves making separate ratings of the quality of evidence for each patient-important outcome, and it identifies five factors that can lower the quality of the evidence.

Study limitations/risk of bias: Confidence in evidence quality and subsequent recommendations in clinical care decreases if studies have major limitations that may bias their estimates of the treatment effect. These limitations include lack of allocation concealment; lack of blinding, particularly if outcomes are subjective and their assessment highly susceptible to bias; a large loss to follow-up; failure to adhere to an intention-to-treat analysis; stopping early for benefit; or selective reporting of outcomes. For example, a randomised trial suggests that danaparoid sodium is of benefit in treating heparin-induced thrombocytopenia complicated by thrombosis. That trial, however, was unblinded and the key outcome was the clinicians’ assessment of when the thromboembolism had resolved, a subjective judgment.

Inconsistent results: Widely differing estimates of the treatment effect (heterogeneity or variability in results) across studies suggest true differences in the underlying treatment effect. Variability may arise from differences in populations, interventions, or outcomes. When heterogeneity exists but investigators fail to identify a plausible explanation then the quality of evidence decreases. For example, the randomised trials of alternative approaches to the Whipple procedure yielded widely differing estimates of effects on gastric emptying, thus further decreasing the quality of the evidence.

Indirectness of evidence: Guideline developers face two types of indirectness of evidence. The first occurs when, for example, considering use of one of two active drugs. Although randomised comparisons of the drugs may be unavailable, randomised trials may have compared one of the drugs with placebo and the other with placebo. Such trials allow indirect comparisons of the magnitude of effect of both drugs. Such evidence is of lower quality than would be provided by head to head comparisons of the two drugs. The second type of indirectness includes differences between the population, intervention, comparator to that intervention, and outcome of interest, and those included in the relevant studies.
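To illustrate why an indirect comparison through placebo carries more uncertainty than a head-to-head trial, here is a minimal sketch of an adjusted indirect comparison. The source does not name a method; this uses the commonly taught approach of subtracting log relative risks and adding their variances. The function name `indirect_comparison` and all trial figures are hypothetical.

```python
import math


def indirect_comparison(rr_a, ci_a, rr_b, ci_b, z=1.96):
    """Estimate drug A vs drug B from two placebo-controlled comparisons.

    rr_a, rr_b : relative risks of A vs placebo and B vs placebo
    ci_a, ci_b : their 95% confidence intervals as (low, high) tuples
    Returns (relative risk of A vs B, lower limit, upper limit).
    """
    def se_from_ci(ci):
        low, high = ci
        # Standard error on the log scale, recovered from the CI width.
        return (math.log(high) - math.log(low)) / (2 * z)

    log_rr = math.log(rr_a) - math.log(rr_b)
    # Variances add, so the indirect estimate is less precise than either input.
    se = math.sqrt(se_from_ci(ci_a) ** 2 + se_from_ci(ci_b) ** 2)
    return (
        math.exp(log_rr),
        math.exp(log_rr - z * se),
        math.exp(log_rr + z * se),
    )


# Hypothetical trial results, for illustration only.
rr, low, high = indirect_comparison(0.70, (0.55, 0.89), 0.80, (0.64, 1.00))
print(f"A vs B: RR {rr:.2f} (95% CI {low:.2f} to {high:.2f})")
```

Even though each drug looks beneficial against placebo in this made-up example, the interval for A versus B is wider than either input interval, which is one reason such evidence is rated lower than a direct comparison.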

Imprecision: When studies include relatively few patients and few events, and thus have wide confidence intervals, a guideline panel judges the quality of the evidence to be lower because of the resulting uncertainty in the results. For example, the confidence intervals for most of the outcomes for alternatives to the Whipple procedure include both important effects and no effect at all, and some include important differences in both directions.
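The link between few events and wide confidence intervals can be seen in a small calculation. The sketch below uses the standard large-sample formula for the confidence interval of a risk ratio on the log scale. The function name `risk_ratio_ci` and the event counts are hypothetical, chosen so that both trials have the same point estimate.

```python
import math


def risk_ratio_ci(events_t, n_t, events_c, n_c, z=1.96):
    """Risk ratio (treatment vs control) with an approximate 95% CI."""
    rr = (events_t / n_t) / (events_c / n_c)
    # Standard error of log(RR); dominated by the number of events.
    se = math.sqrt(1 / events_t - 1 / n_t + 1 / events_c - 1 / n_c)
    return rr, math.exp(math.log(rr) - z * se), math.exp(math.log(rr) + z * se)


# Same observed effect (RR = 0.5), very different amounts of information.
small = risk_ratio_ci(4, 40, 8, 40)
large = risk_ratio_ci(400, 4000, 800, 4000)
for label, (rr, low, high) in (("small trial", small), ("large trial", large)):
    print(f"{label}: RR {rr:.2f} (95% CI {low:.2f} to {high:.2f})")
```

In the small hypothetical trial the interval spans both a substantial benefit and possible harm, so a panel could not tell them apart; in the large one the interval excludes no effect.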

Publication bias: The quality of evidence will be reduced if investigators fail to report studies they have undertaken. Unfortunately, guideline panels must often guess about the likelihood of publication bias. A prototypical situation that should elicit suspicion of publication bias occurs when published evidence is limited to a small number of trials, all of which are funded by industry. For example, 14 trials of flavonoids in patients with haemorrhoids have shown apparent large benefits. The heavy involvement of sponsors in most of these trials raises questions of whether unpublished trials suggesting no benefit exist.

Answers extracted from:

- Guyatt GH, Oxman AD, Kunz R, Vist GE, Falck-Ytter Y, Schünemann HJ; GRADE Working Group. What is "quality of evidence" and why is it important to clinicians? BMJ. 2008 May 3;336(7651):995-8. doi: 10.1136/bmj.39490.551019.BE.

Q: What is the role of theory in research?

A: The role of theory is to guide you through the research process. Theory supports formulating the research question, guides data collection and analysis, and offers possible explanations of underlying causes of or influences on phenomena. From the start of your research, theory provides you with a ‘lens’ to look at the possible underlying explanation of the relationships between the variables or factors under study.

In a qualitative study, this ‘theoretical lens’ helps to focus your attention on specific aspects of the data and provides you with a conceptual model or framework for analysing them. It supports you in moving beyond the individual ‘stories’ of the participants. This leads to a broader understanding of the phenomenon under study and a wider applicability and transferability of the findings, which might help you formulate new theory, or advance a model or framework. Note that research does not always need to be theory-based; an example is a descriptive study in which people are interviewed about perceived facilitators of and barriers to adopting new behaviour.

Answers extracted from:

- Korstjens I, Moser A. Series: Practical guidance to qualitative research. Part 2: Context, research questions and designs. Eur J Gen Pract. 2017;23(1):274–279. doi:10.1080/13814788.2017.1375090

Q: How is medical and clinical research classified?

A: The taxonomy and terms used to describe clinical research still lack a standard nomenclature, and the labels are often counter-intuitive relative to their contents. For example, observational studies are contrasted with experimental studies, implying that experimental studies involve no observation or that observational studies exclude any experimental element. Another example is the classification of non-experimental (observational) studies as either analytical or descriptive, where the former involve comparison groups and the latter, such as case reports and case series, do not. This confuses users, as if descriptive studies required no analytical statistics or analytical thinking, or analytical studies included no descriptive statistics. The characteristics of each study design should be well understood before the design is used, so that it produces valid and precise results.

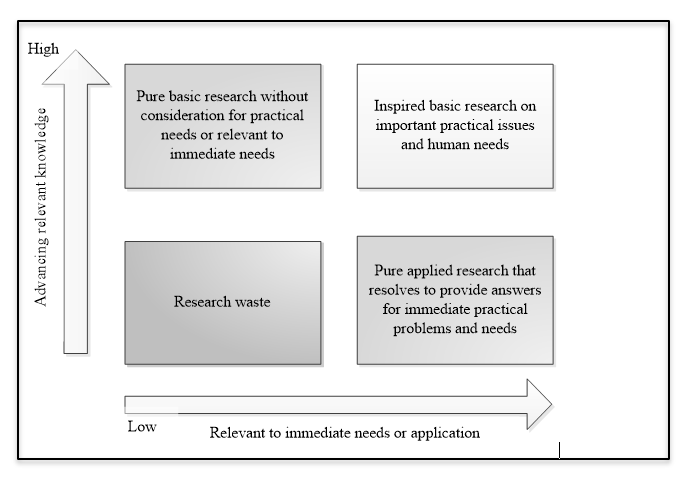

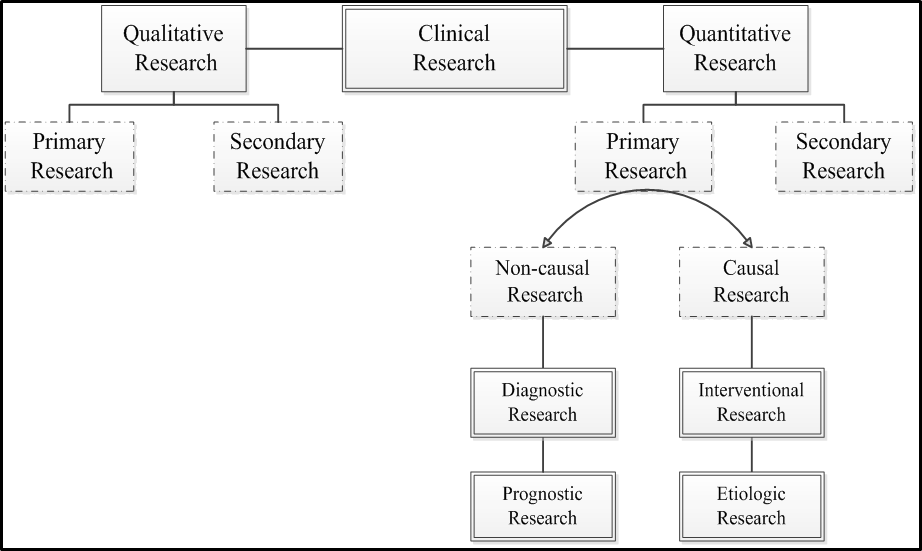

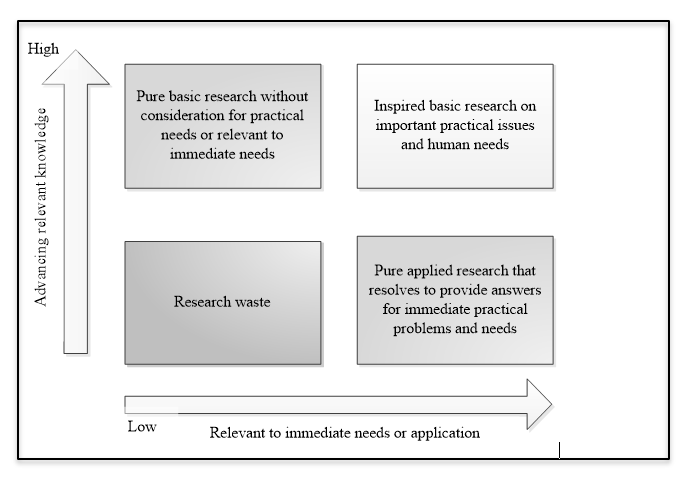

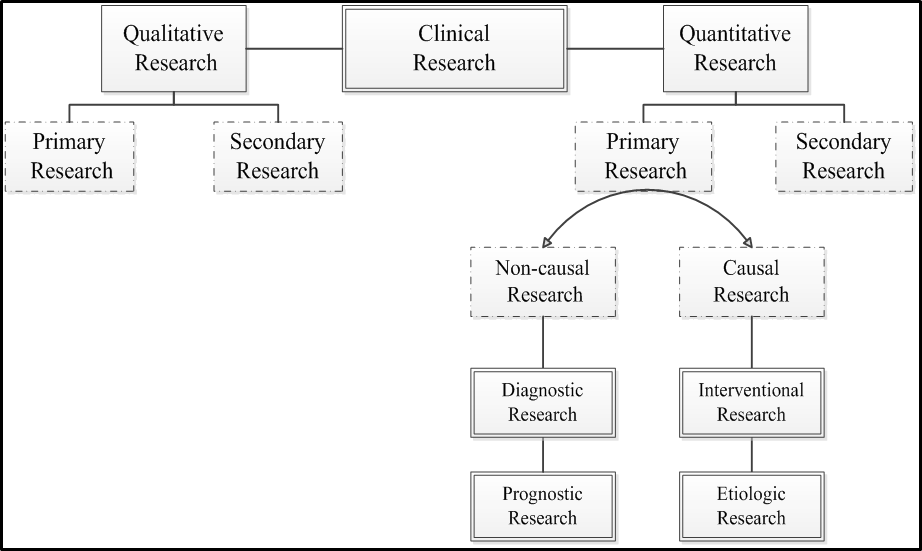

Medical and clinical research can be classified in many different ways. Most people are probably familiar with the classification into basic research, clinical research, health care (services) research, health systems (policy) research, and educational research [1]. Stokes’ classification, later modified by Chalmers et al. [2], is based on two axes, knowledge generation and application to immediate needs, which yield four large quadrants of research categories (see the figure). According to Grobbee and Hoes [3], clinical research is best categorised as diagnostic, prognostic, interventional (for treatment effect), or etiologic (see the figure). The first two are non-causal research (also commonly known as descriptive research), while the last two are causal research (see the table below for examples). Diagnostic and prognostic research are similar in many ways, essentially in establishing the presence of a disease or condition; a prognosis is, in effect, a diagnosis made for a future point in time. The main difference is that diagnostic research is cross-sectional, whereas prognostic research is longitudinal. Etiologic research is concerned with finding the incidental cause or causes of a health condition or disease, in contrast to interventional research, which examines the effect of a man-made intervention.

| Category of research | Example of the clinical research |
|---|---|
| Diagnostic | - Plasma concentration of B-type natriuretic peptide (BNP) in the diagnosis of left ventricular dysfunction<br>- The Centor and McIsaac scores and the Group A Streptococcal pharyngitis<br>- The Wells rule in deep venous thrombosis |
| Prognostic | - The Apgar score and infant mortality<br>- The Glasgow Coma Scale (GCS) in prediction of mortality in non-traumatic coma<br>- SCORE (Systematic COronary Risk Evaluation) for the estimation of ten-year risk of fatal cardiovascular disease |
| Intervention | - Dexamethasone to decrease chronic lung disease in very low birth weight infants<br>- Intravenous streptokinase for acute myocardial infarction<br>- Bariatric surgery vs non-surgical treatment of obesity in type 2 diabetes and metabolic syndrome<br>- Pap smear screening and changes in cervical cancer mortality |
| Etiologic | - Smoking and lung cancer<br>- Thalidomide and reduction deformities of the limbs<br>- Work stress and risk of cardiovascular mortality |

|

Research category

|

Important features of the different categories of research

|

|

Objective

|

Issues in Design

|

Statistics

|

|

Diagnostic

|

Establishing diagnostic factors, the value of a new factor of test or scoring models for a condition

|

- Real practice variables

- Representative samples

- Cross-sectional

- A referent (gold) standard for the diagnosis

- Usually a dichotomous outcome

|

- Step-wise or ‘ENTER’ multivariable analysis with established determinants

- Penalization or shrinkage method

- Odds ratio

|

|

Prognostic

|

Establishing prognostic factors, the value of a new factor of test or scoring models for a condition

|

- Real practice variables

- Representative samples\

- Longitudinal

- A referent (gold) standard for the diagnosis of the outcome

|

- Multivariable survival analysis

- Calibration, discrimination, internal and external validation

- Hazard ratio

|

|

Intervention

|

Establishing the efficacy or effectiveness of an intervention or treatment compared to another accepted comparator or a control

|

- Comparability between groups by randomization

- Relevant samples

- Inherently prospective and longitudinal

- Blinding

|

- Adjustment for time factor and baseline imbalance in multivariable analysis

- Intention-to-treat and per-protocol analyses

|

|

Etiologic

|

Finding the cause or causes of a condition or disease

|

- Extraneous factors and all potential confounders are to be included and measured accurately

|

- Interaction of factors

- Relative risk

- Incidences (cumulative, density etc.)

|
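The odds ratio and relative risk listed under Statistics above can be illustrated with a simple 2x2 table. The following is a minimal Python sketch only: the function name `two_by_two_measures` and the counts are hypothetical, chosen for illustration rather than taken from any study, and the sketch assumes that no cell of the table is zero.

```python
def two_by_two_measures(a, b, c, d):
    """Return risk-based measures from a 2x2 table.

    a: exposed, with outcome      b: exposed, without outcome
    c: unexposed, with outcome    d: unexposed, without outcome
    Assumes all four counts are non-zero.
    """
    risk_exposed = a / (a + b)      # cumulative incidence in the exposed
    risk_unexposed = c / (c + d)    # cumulative incidence in the unexposed
    return {
        "risk_exposed": risk_exposed,
        "risk_unexposed": risk_unexposed,
        "relative_risk": risk_exposed / risk_unexposed,
        "odds_ratio": (a * d) / (b * c),
    }


# Hypothetical counts, for illustration only
measures = two_by_two_measures(a=30, b=70, c=10, d=90)
for name, value in measures.items():
    print(f"{name}: {value:.2f}")
```

With these made-up counts the relative risk is about 3.0 and the odds ratio about 3.86, which shows that the two measures diverge when the outcome is common.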

Answers extracted from:

- Green LA: The research domain of family medicine. Ann Fam Med 2004, 2 Suppl 2:S23-29.

- Chalmers I, Bracken MB, Djulbegovic B, Garattini S, Grant J, Gulmezoglu AM, Howells DW, Ioannidis JP, Oliver S: How to increase value and reduce waste when research priorities are set. Lancet 2014, 383(9912):156-165.

- Grobbee DE, Hoes AW: Clinical Epidemiology: Principles, Methods, and Applications for Clinical Research: Jones & Bartlett Learning; 2014.

- Chew BH: Understanding and conducting clinical research - a clinical epidemiology approach by a clinician for clinicians: Serdang UPM, Malaysia; 2019.

|

| |

|

| |

|

|

Q: What are the fundamental concepts of clinical epidemiology in clinical research? |

| |

|

| |

A: Clinical epidemiology is a discipline that studies, and seeks to quantify, the occurrence of diseases and health-related conditions in a specified population over a specified period of time in a clinical setting. The term "occurrence" encompasses the whole spectrum of research methodologies, designs and statistical analyses or strategies. At its frontiers, epidemiology becomes more subjective and more of an art than an objective science. This is where experts argue about the purpose of the methods and about whether the methods are sufficient for that purpose. Nevertheless, there is always a best (though not perfect) method for finding the answers (though not the truth).

The most important fundamental of quantitative research in clinical epidemiology is validity of measurement. The second is the sampling of a sufficient number of participants, because statistical theory shows that estimates from larger samples drawn from a population tend to lie closer to the true population values. On top of these essential characteristics, research should be relevant to existing clinical practice. In other words, reinventing the wheel is not frowned upon when the wheel needs to be reconceptualized and its function improved to keep pace with progress in its associated systems and uses.
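The effect of sample size on how close a sample estimate lies to the population value can be sketched with the standard error of a mean, which equals the standard deviation divided by the square root of the sample size. The Python snippet below is only an illustration: it assumes simple random sampling, and the standard deviation of 10 units is a hypothetical value.

```python
import math


def standard_error_of_mean(sd, n):
    """Standard error of a sample mean under simple random sampling."""
    return sd / math.sqrt(n)


population_sd = 10.0  # hypothetical value, for illustration only
for n in (25, 100, 400):
    se = standard_error_of_mean(population_sd, n)
    print(f"n = {n:>3}: standard error = {se:.2f}")
```

Quadrupling the sample size halves the standard error, so each further gain in precision requires a disproportionately larger sample.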

A true state of disease occurrence, or of a relationship between two or more variables, is believed to exist, and it can only be found or approximated if the study uses a proper design and is conducted soundly throughout the research process. Clinical epidemiology emphasizes that research should produce valid and precise results, as close as possible to the truth, rather than merely statistically significant results. Validity of a study refers to the absence of systematic error, or bias, and precision denotes the absence of random error. Bias is best avoided through the best possible design and further estimated in the statistical analysis, because bias that has not been avoided is difficult, even impossible, to correct with even the most advanced statistical tests. Imprecision is caused by random error arising from data variability and can often be overcome by increasing the sample size. Therefore, a study can be designed to have the most power to detect a real difference in the population, if one exists, by conducting a proper sample size estimation based on its hypothesis at the theoretical design stage. Validity takes precedence over precision because, without validity, a highly precise study will produce a precisely invalid result.
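As an illustration of sample size estimation at the design stage, the sketch below uses the common normal-approximation formula for comparing the means of two equally sized groups, n per group = 2 (z for 1 - alpha/2 + z for power)^2 SD^2 / difference^2. It is one textbook approach among several and is not necessarily the method the cited sources recommend; the function name and all input values are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_two_means(sd, delta, alpha=0.05, power=0.80):
    """Approximate participants needed per group to detect a mean
    difference `delta`, given a common standard deviation `sd`,
    a two-sided significance level `alpha` and the desired `power`.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power = 0.80
    n = 2 * ((z_alpha + z_beta) * sd / delta) ** 2
    return math.ceil(n)  # round up to whole participants


# Hypothetical inputs: SD of 10 units, smallest difference of interest 5 units
print(sample_size_two_means(sd=10, delta=5))              # 63 per group
print(sample_size_two_means(sd=10, delta=5, power=0.90))  # more are needed for higher power
```

The calculation addresses random error only: a larger sample improves precision and power but does nothing to remove bias.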

Validity of an instrument is defined as the degree to which the instrument measures what it is intended to measure; that is, the results of the measurement correlate with the true state of an occurrence. Another widely used word for validity is accuracy. Internal validity refers to the degree of accuracy of a study's results for its own study sample, and it is influenced by the study design. External validity, in contrast, refers to the applicability of a study's results to other populations. Also known as generalizability, it expresses how valid it is to assume that the study population and other populations are similar and comparable. Reliability of an instrument denotes the extent of agreement between the results of repeated measurements of an occurrence by that instrument at different times, by different investigators or in different settings. Other terms used for reliability include reproducibility and precision (see the figure).
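The distinction between validity (accuracy) and reliability (precision) of an instrument can be sketched numerically. In the hypothetical Python example below, two made-up instruments measure a quantity whose true value is assumed to be known. The mean deviation from the true value serves as a simple indicator of systematic error, and the standard deviation of repeated readings as a simple indicator of random error. The readings and the function name are illustrative assumptions only.

```python
from statistics import mean, stdev


def bias_and_spread(readings, true_value):
    """Return (mean error, standard deviation) of repeated readings."""
    return mean(readings) - true_value, stdev(readings)


true_value = 120  # hypothetical true value, e.g. a reference measurement

# Instrument A: readings agree closely with each other but are all too high
instrument_a = [125, 126, 124, 125, 125]
# Instrument B: readings are centred on the true value but scatter widely
instrument_b = [110, 130, 120, 115, 125]

for name, readings in (("A", instrument_a), ("B", instrument_b)):
    bias, spread = bias_and_spread(readings, true_value)
    print(f"Instrument {name}: bias = {bias:+.1f}, spread (SD) = {spread:.2f}")
```

Instrument A is reliable but not valid, whereas instrument B is valid on average but unreliable. Averaging more readings helps B but can never correct A.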

Answers extracted from:

- Rothman KJ: Epidemiology: An Introduction: Oxford University Press; 2012.

- Grobbee DE, Hoes AW: Clinical Epidemiology: Principles, Methods, and Applications for Clinical Research: Jones & Bartlett Learning; 2014.

|

| |

|

| |

|